Loss Functions in Machine Learning

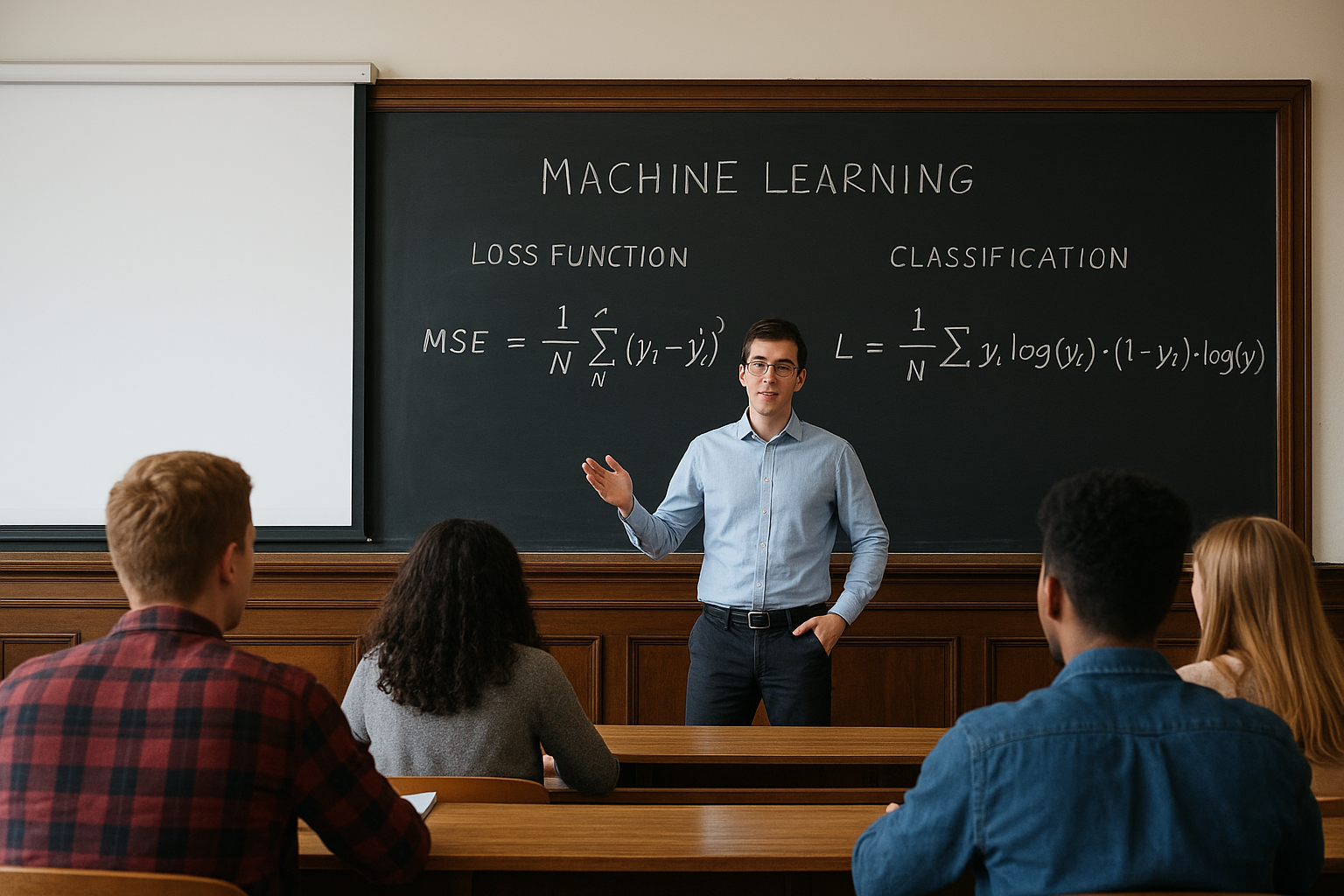

Loss functions are the foundation of machine learning, guiding models to improve by measuring how far predictions are from actual outcomes. Mean Squared Error (MSE) is commonly used for regression to penalize large prediction errors, while Cross Entropy Loss is the standard for classification, penalizing incorrect probability estimates, especially when models are confidently wrong.

Understanding Loss Functions in Machine Learning

In the world of machine learning, models are built to make predictions — whether it’s forecasting house prices, classifying images, or detecting spam emails. But how do we measure whether a model is doing a good job? The answer lies in loss functions.

Loss functions are at the heart of machine learning. They provide a numerical measure of how well (or poorly) a model’s predictions match the actual outcomes. By quantifying the “cost of being wrong,” loss functions guide the optimization process, telling the model how to adjust its parameters to improve performance.

Put simply:

- Loss functions act as the compass of machine learning.

- They point the optimization algorithm in the direction that minimizes errors.

There are many loss functions, each tailored to different types of problems. Among the most widely used are Mean Squared Error (MSE) and Cross Entropy Loss. Let’s explore both in detail.

Mean Squared Error (MSE)

Mean Squared Error (MSE) is one of the most common loss functions, especially for regression problems. It measures the average squared difference between the predicted values and the actual values.

The formula for MSE is:

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2$$

Where:

- 𝑦 = actual value

- ŷ = predicted value

- 𝑛 = number of data points

Why square the errors?

Squaring ensures that all error values are positive, and it penalizes large mistakes much more heavily than small ones. This makes MSE particularly useful when big errors are especially undesirable.
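The effect of squaring can be seen on a handful of numbers. The sketch below uses three made-up errors (actual minus predicted) and compares the absolute error with the squared error; the values are assumptions chosen only for illustration.

```python
# Hypothetical prediction errors (actual minus predicted), chosen for illustration.
errors = [1.0, -2.0, 10.0]

for e in errors:
    # Squaring removes the sign and grows quadratically with the error size.
    print(f"error={e:6.1f}  absolute={abs(e):6.1f}  squared={e ** 2:6.1f}")

squared = [e ** 2 for e in errors]
```

An error that is 10 times larger contributes 100 times more to the squared loss, which is why large mistakes weigh so heavily in MSE.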

Example:

Suppose we're predicting house prices with a regression line. The distance between each actual price and the predicted line represents the error. By squaring these errors, outliers (like an overpriced luxury home) have a larger impact, pushing the model to adjust more carefully.

Key Properties of MSE

- Best for regression tasks

- Sensitive to outliers (a single large error can dominate the loss)

- Encourages the model to reduce large deviations in predictions
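The outlier sensitivity listed above can be made concrete with a small sketch. The house prices below (in thousands) are hypothetical; the last home stands in for a luxury outlier that the model badly underestimates.

```python
# Hypothetical house prices in thousands; the last home is a luxury outlier.
actual = [200.0, 250.0, 300.0, 1500.0]
predicted = [210.0, 240.0, 310.0, 500.0]

squared_errors = [(a - p) ** 2 for a, p in zip(actual, predicted)]
mse = sum(squared_errors) / len(squared_errors)

# Fraction of the total squared error that comes from the single outlier.
outlier_share = squared_errors[-1] / sum(squared_errors)

print(f"MSE = {mse:.1f}")
print(f"share of loss from the outlier = {outlier_share:.2%}")
```

In this toy data set, three of the four predictions are off by only 10, yet almost all of the loss comes from the one large miss.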

MSE Calculations in Python

To calculate the MSE in Python, you can follow these steps:
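A minimal sketch of the calculation in plain Python, using only the standard library. The function name `mean_squared_error` and the sample values are illustrative assumptions; a numerical library would typically be used for larger data sets.

```python
def mean_squared_error(y_true, y_pred):
    """Return the average squared difference between actual and predicted values."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("at least one data point is required")
    # Step 1: take the error per data point. Step 2: square it. Step 3: average.
    squared_errors = [(y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)]
    return sum(squared_errors) / len(squared_errors)


# Illustrative values (assumed for this example).
y_true = [3.0, 5.0, 2.5, 7.0]
y_pred = [2.5, 5.0, 4.0, 8.0]

mse = mean_squared_error(y_true, y_pred)
print(f"MSE: {mse}")
```

For these values the squared errors are 0.25, 0, 2.25 and 1, so the mean is 0.875.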

You can subsequently plot the true vs predicted values, and the squared errors:

This will lead to the following visualization of the Mean Squared Error:

Cross Entropy Loss

While MSE is ideal for regression, Cross Entropy Loss is the standard choice for classification tasks. It measures the difference between two probability distributions:

- The true distribution (the actual labels)

- The predicted probability distribution (what the model outputs)

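The formula for Cross Entropy (for binary classification, for a single example) is:

$$\mathrm{CE} = -\left[\, y \log(\hat{y}) + (1 - y)\log(1 - \hat{y}) \,\right]$$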

Where:

- y = actual class (0 or 1)

- ŷ = predicted probability of class 1

How it works

Cross Entropy heavily penalizes cases where the model is confidently wrong. If the true label is 1 but the model predicts a probability close to 0, the loss is very high. Conversely, if the model assigns high probability to the correct class, the loss is small.

Example

Consider classifying emails as spam or not spam. If the model predicts 95% probability for "spam" and the email is indeed spam, the loss is minimal. But if it predicts 95% "not spam" when the email is actually spam, the loss is very large.
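The spam example can be worked through numerically. The sketch below assumes the natural logarithm and a hypothetical helper named `binary_cross_entropy` that scores a single example.

```python
import math


def binary_cross_entropy(y, p):
    """Loss for one example: y is the true label (0 or 1), p is the predicted P(class 1)."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


# The email is actually spam (y = 1).
confident_right = binary_cross_entropy(1, 0.95)  # model says 95% spam
confident_wrong = binary_cross_entropy(1, 0.05)  # model says 95% not spam

print(f"confident and right: {confident_right:.4f}")
print(f"confident and wrong: {confident_wrong:.4f}")
```

The correct prediction costs about 0.05, while the confidently wrong one costs about 3.0, nearly 60 times more.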

Key Properties of Cross Entropy

- Best for classification tasks

- Focuses on probabilities rather than raw predictions

- Strongly penalizes confident misclassifications

- Encourages models to become more accurate in probability estimation

CE Calculations in Python

To calculate the Cross Entropy Loss in Python, you can follow these steps. Please note that this example only considers binary classification (e.g., cat vs. dog).
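A minimal sketch in plain Python that averages the binary cross entropy over a batch. The function name `binary_cross_entropy_loss`, the clipping constant `eps`, and the labels and probabilities are all assumptions made for illustration; the natural logarithm is assumed as well.

```python
import math


def binary_cross_entropy_loss(y_true, y_prob, eps=1e-12):
    """Average binary cross entropy over a batch of examples."""
    if len(y_true) != len(y_prob):
        raise ValueError("y_true and y_prob must have the same length")
    if not y_true:
        raise ValueError("at least one example is required")
    total = 0.0
    for y, p in zip(y_true, y_prob):
        # Clip so that log(0) never occurs for predictions of exactly 0 or 1.
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# Illustrative labels (1 = dog, 0 = cat) and predicted probabilities of "dog".
y_true = [1, 0, 1, 0]
y_prob = [0.9, 0.1, 0.8, 0.3]

loss = binary_cross_entropy_loss(y_true, y_prob)
print(f"Cross Entropy Loss: {loss:.4f}")
```

Clipping is a common safeguard rather than part of the definition: without it, a predicted probability of exactly 0 or 1 for the wrong class would make the logarithm undefined.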

Next, we can plot how CE changes as predicted probability changes for a single true label:
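The points of such a curve can be computed before handing them to a plotting library. The sketch below assumes a true label of 1, for which the binary cross entropy reduces to the negative natural log of the predicted probability; the grid of probabilities is an arbitrary choice.

```python
import math

# Predicted probabilities of class 1 from 0.1 to 0.9.
# The endpoints 0 and 1 are left out to avoid log(0).
probs = [i / 10 for i in range(1, 10)]

# With true label y = 1, the loss for each prediction is -log(p).
losses = [-math.log(p) for p in probs]

for p, loss in zip(probs, losses):
    print(f"p={p:.1f}  loss={loss:.3f}")
```

The loss falls steadily as the predicted probability of the true class rises, from about 2.3 at p = 0.1 toward 0 as p approaches 1.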

This will lead to the following visualization of the Cross Entropy Loss:

This figure shows a curve illustrating that the loss is very high when the model assigns a low probability to the true class and approaches zero as that probability approaches 1.

Comparing MSE and Cross Entropy

The following table compares the MSE and Cross Entropy loss functions:

| Aspect | Mean Squared Error (MSE) | Cross Entropy Loss |
|---|---|---|
| Used for | Regression problems | Classification problems |
| Output type | Continuous values | Probabilities |
| Error treatment | Squares differences, penalizes large errors | Penalizes incorrect probabilities, especially confident wrong predictions |
| Sensitivity | Sensitive to outliers | Sensitive to probability estimates |

Conclusion

Loss functions are central to machine learning because they guide models in the right direction during training. Mean Squared Error (MSE) is the go-to choice for regression problems, where predictions are continuous values and minimizing squared differences is key. Cross Entropy Loss is the standard for classification tasks, where comparing predicted probabilities to true labels ensures accurate and reliable categorization.

By understanding these two core loss functions, data scientists gain deeper insight into how models learn — and how to choose the right tool for the right problem.
