Reinforcement Learning: An Introductory Overview

Reinforcement learning is a powerful branch of machine learning where agents learn to make decisions by interacting with their environment and receiving feedback in the form of rewards or penalties. This article provides a beginner-friendly yet in-depth overview of key reinforcement learning concepts, algorithms, and real-world applications.

What is Reinforcement Learning?

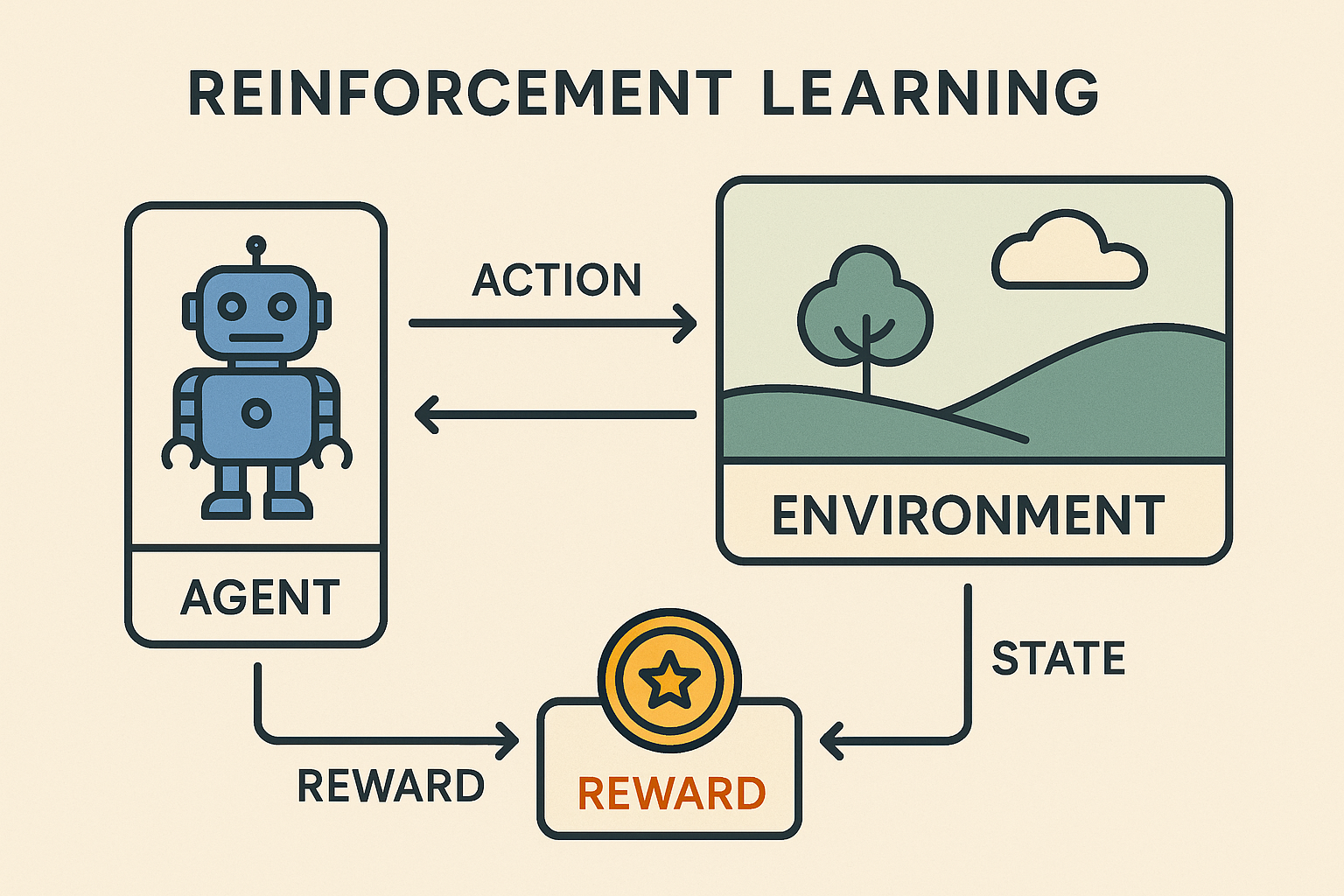

Reinforcement Learning (RL) is a type of machine learning where an agent learns by interacting with an environment and receiving feedback in the form of rewards or penalties. In simple terms, the agent takes actions in its world, and if those actions lead to a desirable outcome (reward), it is more likely to repeat them in the future; if they lead to a bad outcome (penalty), it will tend to avoid them. As one popular analogy describes, an RL agent learns much like a pet being trained with treats and scolding – it is “penalized when it makes the wrong decisions and rewarded when it makes the right ones”. Over time and many trials, the agent discovers which sequences of actions maximize its cumulative reward (the return).

Reinforcement learning involves several core elements:

- Agent: the learner or decision maker (e.g. a robot, program, or game player).

- Environment: everything the agent interacts with (e.g. a game, a room, or an online system).

- State/Observation: the information the agent perceives about the environment at a given time (for example, a camera image of the room, or the current screen in a game).

- Action: a choice the agent can make (like moving left or right, accelerating a car, or selecting a recommendation).

- Reward: a numerical feedback from the environment that tells the agent how well it is doing (higher reward for good outcomes, lower or negative reward for bad outcomes).

At each step, the agent observes the current state (or part of it), chooses an action, and the environment then transitions to a new state and emits a reward. The agent’s goal is to maximize its total reward over time. In other words, it must learn which actions in which situations (states) lead to long-term success, not just immediate gain. This often involves discovering how to trade off short-term rewards for better long-term benefits (the so-called delayed reward problem).
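The observe-act-reward cycle described above can be sketched in a few lines of Python. Everything named here is hypothetical and chosen only for illustration: `CorridorEnv` is a toy five-cell corridor, the reward of 10 at the last cell and the cost of 1 per other step are assumed values, and `run_episode` is not any particular library's API.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..4, with the goal at the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        """Start a new episode and return the initial state."""
        self.position = 0
        return self.position

    def step(self, action):
        """Apply an action (0 = left, 1 = right); return (state, reward, done)."""
        move = 1 if action == 1 else -1
        # Walls at both ends: the position is clamped to the corridor.
        self.position = min(max(self.position + move, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 10.0 if done else -1.0  # assumed values: goal bonus, step cost
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the total (undiscounted) reward collected."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # the agent chooses an action
        state, reward, done = env.step(action)  # the environment responds
        total_reward += reward
        if done:
            break
    return total_reward


def random_policy(state):
    """Ignore the state and pick an action at random."""
    return random.choice([0, 1])


def always_right(state):
    """Always move toward the goal."""
    return 1


print(run_episode(CorridorEnv(), always_right))   # 7.0 (three steps at -1, then +10)
print(run_episode(CorridorEnv(), random_policy))  # varies, never above 7.0
```

The random policy usually wanders before it reaches the goal and pays the step cost along the way, which is the gap a learning algorithm is meant to close.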

Q-Learning: Learning Action Values

One of the simplest and most famous RL algorithms is Q-learning. Q-learning is a value-based method: it learns a table or function of Q-values that estimate how good each action is in each state. Here “Q” stands for the “quality” of a state-action pair. Conceptually, Q-learning works like this: initially the agent has no idea which actions are good, so it tries them and collects rewards. Every time the agent takes action A in state S and observes a reward, it nudges its estimate Q(S,A) toward the sum of that reward plus the (discounted) maximum estimated future reward obtainable from the next state. Over many trials, the Q-values converge toward the expected long-term return of each action. In practice, the agent often uses a simple rule (called ε-greedy) to balance exploration and exploitation: most of the time it takes the best-known action so far (highest Q-value), but some of the time it tries a random action to discover new possibilities.
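The update described above is usually written as follows. The symbols are standard but do not appear in the text: α is an assumed learning rate between 0 and 1, γ is a discount factor that weights future rewards, R is the observed reward, and S' is the next state.

```latex
Q(S, A) \leftarrow Q(S, A) + \alpha \left[ R + \gamma \max_{a'} Q(S', a') - Q(S, A) \right]
```

The bracketed term is the gap between the new estimate (reward plus discounted best future value) and the old one, so each update moves Q(S,A) a fraction α of the way toward the new estimate.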

- Key ideas of Q-learning: It is model-free (does not assume a known model of the environment) and off-policy. It gradually learns the “quality” of actions by updating Q(S,A) using rewards and future estimates. For example, imagine a robot in a maze where the exit is worth 10 points. As it tries different paths, Q-learning will give higher values to moves that lead to the exit faster. At a junction, if moving right ultimately reaches the exit sooner than moving left, the Q-value of the “move right” action will become higher than “move left”. Eventually the agent “learns” the fastest path.

- What it solves: Q-learning is well suited for problems with discrete states and actions, where you can estimate values for each possible action and gradually improve them. In this tabular setting it is guaranteed to converge to an optimal policy (best actions), provided every state-action pair keeps being tried and the learning rate is decayed appropriately. However, in very large or continuous state spaces (e.g. raw image inputs or many continuous variables), keeping and updating a full Q-table is impractical. This leads to deep Q-learning, discussed below, where neural networks approximate the Q-values.
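To make the ideas above concrete, here is a minimal sketch of tabular Q-learning with ε-greedy exploration. It assumes a hypothetical five-cell corridor rather than a full maze: the exit at the right end is worth 10 points as in the example, every other step gives 0, and the learning rate, discount factor, and ε are illustrative values, not recommendations.

```python
import random


def train_q_learning(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                     epsilon=0.1, seed=0):
    """Learn a Q-table for a corridor whose exit is the right-most cell."""
    rng = random.Random(seed)
    n_actions = 2                      # 0 = move left, 1 = move right
    goal = n_states - 1
    q = [[0.0] * n_actions for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        for _ in range(100):           # cap on episode length
            # Epsilon-greedy: explore with probability epsilon, else exploit.
            if rng.random() < epsilon:
                action = rng.randrange(n_actions)
            else:
                best = max(q[state])
                # Break ties at random so early episodes are not stuck.
                action = rng.choice(
                    [a for a in range(n_actions) if q[state][a] == best])

            # Environment dynamics: move one cell, walls at both ends.
            step = 1 if action == 1 else -1
            next_state = min(max(state + step, 0), goal)
            done = next_state == goal
            reward = 10.0 if done else 0.0

            # Q-learning update: move Q(S, A) toward reward + discounted
            # best value of the next state (no future value after the exit).
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])

            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Return the highest-valued action for each state."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in q]


q_table = train_q_learning()
print(greedy_policy(q_table)[:4])  # actions for the four non-exit cells
```

Because future rewards are discounted by γ, cells closer to the exit end up with higher Q-values for "move right", which is how the table comes to encode the fastest path.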

Policy Gradient Methods: Directly Optimizing the Policy

An alternative class of algorithms, known as policy gradient methods, learns a policy directly instead of a value function. A policy is simply the agent’s decision-making strategy – typically expressed as a probability distribution over actions given each state. Policy gradient algorithms adjust the parameters of this policy (for example, the weights of a neural network) to maximize the expected reward. In essence, they perform gradient ascent on the expected return with respect to the policy parameters.
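One common way to write this gradient ascent is shown below. It is a sketch in standard notation that the article itself does not define: θ denotes the policy parameters, J(θ) the expected return, π_θ(A | S) the probability the policy assigns to action A in state S, G the return that followed the action, and α an assumed step size.

```latex
\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta}\left[ \nabla_\theta \log \pi_\theta(A \mid S) \, G \right],
\qquad
\theta \leftarrow \theta + \alpha \, \nabla_\theta J(\theta)
```

Read informally: actions that were followed by a high return have their log-probability pushed up, and actions followed by a low return are pushed up less (or pushed down, if a baseline is subtracted from G).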

- Key ideas of policy gradients: These methods “directly learn the optimal policy by applying gradient ascent on the expected reward”. The policy is parameterized (often by a neural network) so that given a state it outputs action probabilities. The algorithm uses the received rewards to compute an estimated gradient that tells it how to change the policy parameters to get higher reward. In practice, this often involves running many episodes (simulated playthroughs) and using the collected data to nudge the policy toward more rewarding actions.

- Why use them: Policy gradients shine in situations where actions are too numerous or continuous to handle with a value table. For example, a robot with arms moving in continuous space has infinitely many possible motions; representing a value for each is impossible. Policy methods can smoothly adjust a continuous policy. In fact, policy methods can handle high-dimensional or continuous action spaces more easily than value-based methods. As one explanation notes, value-based Q-learning must estimate a value for every possible action at each state, which becomes intractable when there are many or continuous actions. By contrast, policy gradient methods “parametrize the policy and estimate the gradient of the cumulative rewards with respect to the policy parameters”, directly improving the policy itself.

- Stochastic policies and exploration: Policy gradient algorithms often produce stochastic policies, meaning the policy assigns probabilities to actions. Each time the agent is in a state, it samples an action according to these probabilities. This inherently encourages exploration – even in the same situation the agent might occasionally try a non-greedy action. Over time, actions that lead to higher rewards get their probabilities increased. This approach is especially useful in complex tasks where exploration is crucial to find the best strategy.
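The points above can be illustrated with a very small policy gradient learner. This sketch assumes a hypothetical one-state problem with three actions that pay fixed rewards of 1, 2, and 3; the softmax policy, the running-average baseline, and the learning rate are illustrative choices rather than anything prescribed by the text.

```python
import math
import random


def softmax(preferences):
    """Turn action preferences into a probability distribution."""
    highest = max(preferences)
    exps = [math.exp(p - highest) for p in preferences]  # shift for stability
    total = sum(exps)
    return [e / total for e in exps]


def train_policy_gradient(rewards=(1.0, 2.0, 3.0), steps=5000, lr=0.1, seed=0):
    """Gradient ascent on expected reward for a softmax policy."""
    rng = random.Random(seed)
    theta = [0.0] * len(rewards)   # policy parameters, one per action
    baseline = 0.0                 # running average of observed rewards

    for t in range(1, steps + 1):
        probs = softmax(theta)
        # Stochastic policy: sample an action from the current probabilities.
        action = rng.choices(range(len(theta)), weights=probs)[0]
        reward = rewards[action]

        # For a softmax policy, d log pi(action) / d theta[k] is
        # (1 if k == action else 0) - probs[k].
        advantage = reward - baseline
        for k in range(len(theta)):
            grad_log_prob = (1.0 if k == action else 0.0) - probs[k]
            theta[k] += lr * advantage * grad_log_prob

        # Update the baseline as an incremental mean of all rewards so far.
        baseline += (reward - baseline) / t

    return softmax(theta)


final_probs = train_policy_gradient()
print([round(p, 3) for p in final_probs])  # most probability on the last action
```

Note that the policy never becomes fully deterministic: the lower-reward actions keep a small probability, which is the built-in exploration that stochastic policies provide.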

Deep Reinforcement Learning

Deep Reinforcement Learning (Deep RL) refers to combining reinforcement learning algorithms with deep neural networks. Neural networks can serve as powerful function approximators for the policy or for value functions. This allows RL to scale to high-dimensional inputs (like images or sensor readings) and complex problems. In practice, deep networks are used to approximate the agent’s policy or its Q-values, enabling end-to-end learning from raw inputs.

- Deep Q-Networks (DQN): One of the first breakthroughs was DeepMind’s Deep Q-Network (DQN), which used a convolutional neural network to estimate Q-values directly from raw pixels of Atari video games. This allowed the agent to learn to play games like Pong or Breakout from just the screen image. The neural network takes the game screen (state) as input and outputs Q-values for each possible joystick movement (action). The agent then plays many games, updates the network based on rewards, and eventually learns human-level or better gameplay.

- Neural nets + RL successes: In general, deep RL has enabled many impressive achievements. For instance, by combining deep neural networks with RL, algorithms like AlphaGo were created. AlphaGo used deep nets to evaluate Go positions and learn good moves, ultimately defeating world champion Lee Sedol in 2016. Deep RL agents have also mastered dozens of Atari games from pixels, and even complex video games like StarCraft II and Dota 2. According to one tutorial, “reinforcement algorithms that incorporate deep neural networks can beat human experts playing numerous Atari video games, Starcraft II and Dota-2”.

- How it works conceptually: In deep RL, the neural network often plays the role of either the Q-function or the policy. For example, in a policy-gradient approach, a neural network might take the state as input and output the probabilities of each action. Training adjusts the network weights so that actions leading to higher rewards become more likely. In a value-based approach, a neural network might predict Q-values for each action; training adjusts its weights so its value estimates match the rewards it observes. In both cases, the combination of trial-and-error learning (RL) with neural nets’ ability to generalize from large inputs is what makes deep RL powerful.
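As a rough illustration of the value-based case, the sketch below replaces the Q-table with a tiny neural network and applies one Q-learning style update to it. It is a simplified stand-in under stated assumptions, not DQN itself: `TinyQNetwork` is a hypothetical class, the network has a single hidden layer written in plain Python, and practical systems such as DQN add components (for example experience replay and a separate target network) that are omitted here.

```python
import math
import random


class TinyQNetwork:
    """One-hidden-layer network: state vector in, one Q-value per action out."""

    def __init__(self, n_inputs, n_hidden, n_actions, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
                   for _ in range(n_actions)]
        self.b2 = [0.0] * n_actions

    def forward(self, state):
        """Return (hidden activations, Q-values) for a state vector."""
        hidden = [math.tanh(sum(w * x for w, x in zip(row, state)) + b)
                  for row, b in zip(self.w1, self.b1)]
        q_values = [sum(w * h for w, h in zip(row, hidden)) + b
                    for row, b in zip(self.w2, self.b2)]
        return hidden, q_values

    def q_values(self, state):
        return self.forward(state)[1]

    def td_update(self, state, action, reward, next_state, done,
                  gamma=0.99, lr=0.01):
        """One gradient step moving Q(state, action) toward the TD target."""
        # The target is treated as a constant (a "semi-gradient" update).
        if done:
            target = reward
        else:
            target = reward + gamma * max(self.q_values(next_state))
        hidden, q = self.forward(state)
        error = q[action] - target

        # Backpropagate the squared error through the chosen action's output.
        grad_hidden = [error * self.w2[action][j] * (1.0 - hidden[j] ** 2)
                       for j in range(len(hidden))]
        for j in range(len(hidden)):
            self.w2[action][j] -= lr * error * hidden[j]
        self.b2[action] -= lr * error
        for j, grad in enumerate(grad_hidden):
            for i, x in enumerate(state):
                self.w1[j][i] -= lr * grad * x
            self.b1[j] -= lr * grad
        return error


net = TinyQNetwork(n_inputs=4, n_hidden=8, n_actions=2)
state = [0.1, -0.2, 0.3, 0.0]        # made-up observation vector
next_state = [0.2, -0.1, 0.3, 0.1]   # made-up next observation
net.td_update(state, action=1, reward=1.0, next_state=next_state, done=False)
print(net.q_values(state))           # two Q-value estimates, one per action
```

Because the network shares weights across states, an update for one state also shifts the estimates for similar states, which is the generalization that a lookup table cannot offer.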

Applications of Reinforcement Learning

Reinforcement Learning has found many real-world applications where decision-making over time is crucial. Some illustrative examples include:

- Gaming and Simulations: RL has been famously used to train agents to play games at superhuman levels. Examples include board games like Go and Chess (DeepMind’s AlphaGo and AlphaZero), competitive video games like StarCraft II and Dota 2, and classic Atari video games from raw pixels. These successes highlight RL’s ability to learn complex strategies by maximizing long-term score.

- Robotics and Control: In robotics, RL teaches robots to perform tasks such as walking, grasping objects, or flying drones. For example, RL has been used to train robotic arms in factories and to enable robots to follow instructions or navigate terrain. Companies like Covariant.ai use deep RL for industrial robotic picking, and research labs have applied it to robot locomotion. One review notes that deep RL is being applied to robotics in both simulated and real-world settings.

- Autonomous Systems: RL is applied in autonomous vehicles, energy management, and system controls. For example, Google’s DeepMind used RL to improve data center cooling efficiency, and researchers use RL for autonomous flight control. Self-driving car systems also experiment with RL components for planning and decision-making. (These applications leverage RL’s strength in sequential decision-making under uncertainty.)

- Recommendation and Personalization: Online platforms (streaming, e-commerce, news) have begun using RL to personalize content in a way that maximizes long-term user engagement. Unlike traditional recommenders that only optimize immediate clicks, an RL-based recommender can learn to suggest items that keep a user engaged over time. As one source explains, RL “can learn to optimize for long-term rewards, balance exploration and exploitation, and continuously learn online” – qualities that fit recommendation scenarios well. For instance, services like Netflix or YouTube may treat each user session as an environment: the “agent” recommends videos and receives rewards based on watch time or satisfaction, gradually learning a better personalization policy.

- Finance and Industry: In finance, RL algorithms are used for algorithmic trading strategies, where the “agent” makes buy/sell decisions to maximize profit. In industrial settings, RL optimizes supply chains, inventory control, and robotics in warehouses (e.g., RL is used to optimize material handling in factories, as reported by AI industry research).

These examples illustrate how RL enables solutions to problems where decisions have long-term consequences and explicit reward feedback is available. RL is most effective when the problem can be formulated as an agent taking actions in a clearly defined environment with quantifiable goals.

Summary

Reinforcement Learning offers a framework for agents to learn from experience by trial and error, using rewards to guide behavior. Key algorithms include value-based methods like Q-learning (which learn action-value estimates) and policy-based methods like policy gradients (which optimize a parameterized policy directly). Deep RL further extends these ideas by using neural networks to handle complex, high-dimensional inputs, enabling landmark achievements in games and robotics. Because of its ability to learn sequential strategies and balance exploration vs. exploitation, RL is applied in diverse domains from video games and robot control to recommendation systems and autonomous vehicles.

For a beginner, the key takeaways are that RL is like training an agent (or “digital pet”) through rewards, and that algorithms like Q-learning and policy gradients provide ways to learn good behaviors without requiring the agent to be explicitly programmed for every situation.
