The Basic Ideas of Natural Language Processing

Natural Language Processing (NLP) is a branch of artificial intelligence that enables computers to process and understand human language, whether written or spoken. By combining techniques from machine learning, deep learning, and computational linguistics, NLP allows machines to analyze text, recognize patterns, and generate human-like responses, and it underpins applications such as chatbots, search engines, and automated translation systems.

Introduction to Natural Language Processing (NLP)

Natural Language Processing (NLP) is a subfield of artificial intelligence (AI) that enables machines to understand, interpret, and generate human language. It bridges the gap between human communication and computational processing, allowing computers to interact with people in a natural and intuitive way. NLP brings together techniques from computational linguistics, machine learning, and deep learning to process and analyze large volumes of textual and spoken data, uncovering patterns and extracting meaning in ways that mimic human comprehension.

At its core, NLP involves breaking down human language into structured data that a computer can process. This requires addressing complex linguistic challenges, such as syntactic structure, word meanings, and contextual understanding. Advances in NLP have been driven by the development of sophisticated models capable of handling both syntax and semantics, enabling more accurate interpretations of text and speech. From rule-based systems to modern deep learning approaches, NLP continues to evolve, offering increasingly human-like interactions in various applications.

NLP plays a pivotal role in numerous real-world applications, enhancing user experiences and driving business efficiency. Its applications range from virtual assistants and chatbots, which facilitate seamless human-computer communication, to machine translation systems that break language barriers. Sentiment analysis tools enable businesses to gauge public opinion, while automated summarization techniques help distill large volumes of information into concise and meaningful insights. Furthermore, NLP powers intelligent search engines, voice recognition systems, and personalized recommendation engines, making it an indispensable technology in today’s digital landscape.

Key Techniques in NLP

NLP involves a series of computational techniques that help machines process and analyze natural language. These techniques include:

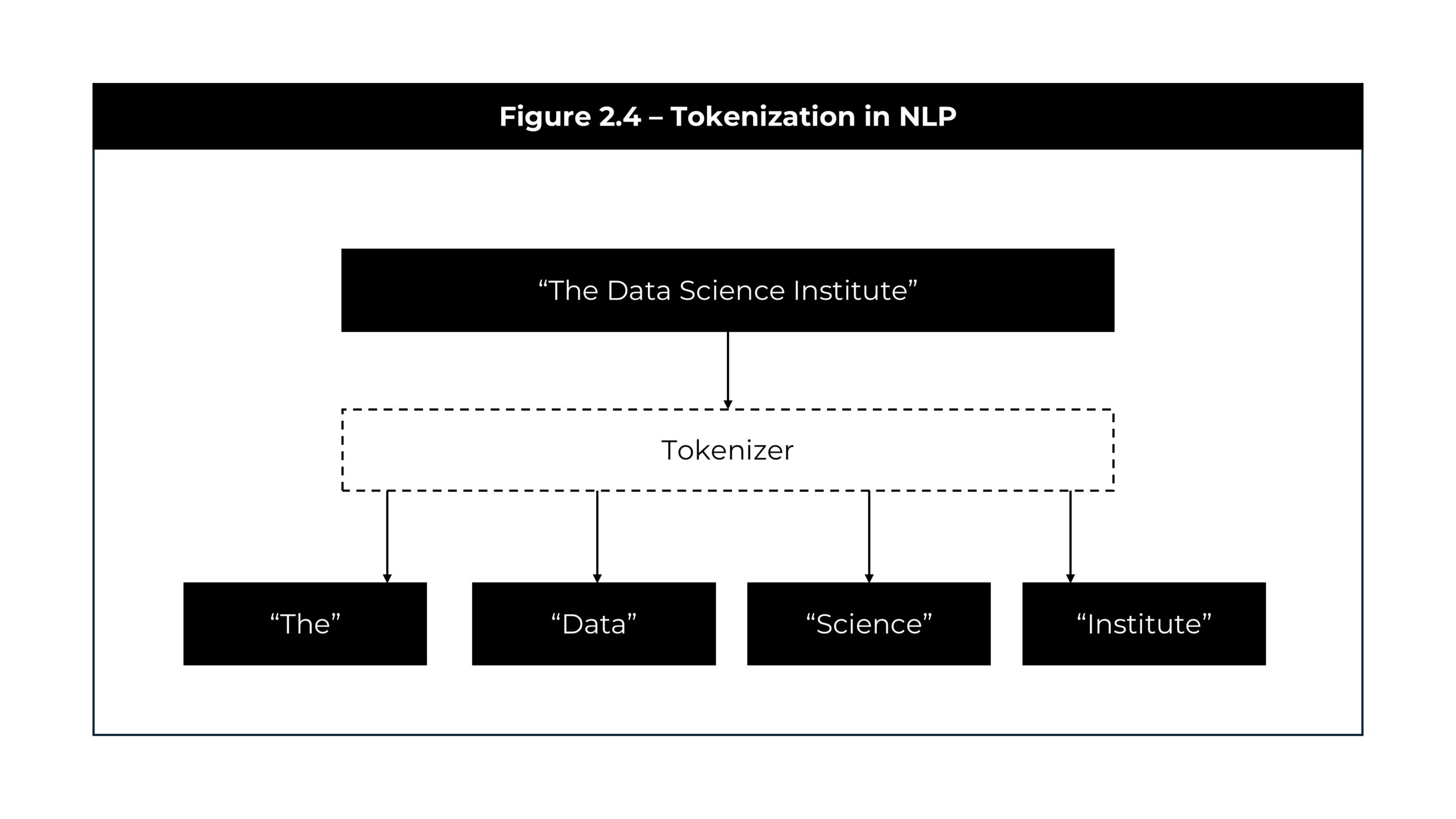

1. Tokenization

Tokenization is the process of breaking text into smaller units, such as words, sentences, or subwords. It is the foundational step in NLP: later tasks such as parsing, named entity recognition, and machine translation all operate on tokens. Word tokenization separates text into individual words, while sentence tokenization divides a passage into distinct sentences. Subword tokenization goes further, splitting words into smaller reusable pieces so that models can handle rare words and complex word formations more robustly.
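A minimal sketch of the three granularities follows. It assumes plain regular expressions for sentence and word splitting and a hypothetical toy vocabulary for a WordPiece-style greedy longest-match subword split. Production tokenizers (for example those in NLTK, spaCy, or Hugging Face `tokenizers`) handle abbreviations, Unicode, and learned vocabularies far more carefully.

```python
import re


def sentence_tokenize(text: str) -> list[str]:
    # Split after ".", "!" or "?" followed by whitespace.
    # Naive: abbreviations such as "Dr." will wrongly end a sentence.
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def word_tokenize(sentence: str) -> list[str]:
    # A token is a run of word characters (optionally with one apostrophe
    # part, as in "don't") or a single punctuation mark.
    return re.findall(r"\w+(?:'\w+)?|[^\w\s]", sentence)


def subword_tokenize(word: str, vocab: set[str]) -> list[str]:
    # Greedy longest-match-first split, WordPiece style: pieces after the
    # first are looked up with a "##" continuation prefix.
    pieces, start = [], 0
    while start < len(word):
        end, match = len(word), None
        while end > start:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            # No piece fits: the whole word becomes an unknown token.
            return ["[UNK]"]
        pieces.append(match)
        start = end
    return pieces
```

For example, `sentence_tokenize("NLP is fun. Tokenizers don't panic!")` yields two sentences, and with the hypothetical vocabulary `{"token", "##izer", "##s"}` the rare word `"tokenizers"` splits into `["token", "##izer", "##s"]` instead of becoming a single unknown token.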

2. Part-of-Speech (POS) Tagging

POS tagging assigns labels to words based on their grammatical category, such as noun, verb, or adjective. This information is crucial for understanding sentence structure and meaning, as well as for downstream tasks like syntax parsing and semantic analysis. Modern NLP systems leverage deep learning-based POS tagging methods that improve accuracy by considering contextual relationships between words rather than relying solely on rule-based approaches.
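To make the contrast with contextual methods concrete, here is a sketch of the classic most-frequent-tag baseline: each word receives whichever tag it carried most often in training, with a few crude suffix rules for unseen words. The training sentences and suffix rules are hypothetical, and the tag names only loosely follow the Universal Dependencies POS tag set.

```python
from collections import Counter, defaultdict


def train_unigram_tagger(tagged_sentences):
    # Count how often each (lowercased) word appears with each tag.
    counts = defaultdict(Counter)
    for sentence in tagged_sentences:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    # Keep only the single most frequent tag for each word.
    return {word: tags.most_common(1)[0][0] for word, tags in counts.items()}


def tag(tokens, model, default="NOUN"):
    tagged = []
    for tok in tokens:
        word = tok.lower()
        if word in model:
            tagged.append((tok, model[word]))
        elif word.endswith("ly"):
            tagged.append((tok, "ADV"))  # hypothetical suffix rule for unseen words
        elif word.endswith("ing") or word.endswith("ed"):
            tagged.append((tok, "VERB"))  # hypothetical suffix rule for unseen words
        else:
            tagged.append((tok, default))  # fall back to the most common open class
    return tagged


# Hypothetical toy training corpus.
CORPUS = [
    [("the", "DET"), ("dog", "NOUN"), ("barks", "VERB")],
    [("a", "DET"), ("cat", "NOUN"), ("sleeps", "VERB")],
    [("the", "DET"), ("old", "ADJ"), ("dog", "NOUN"), ("sleeps", "VERB")],
]
MODEL = train_unigram_tagger(CORPUS)
```

With this model, `tag(["The", "cat", "barks", "loudly"], MODEL)` returns `DET`, `NOUN`, `VERB`, `ADV`. Because every occurrence of a word gets the same tag, the baseline cannot distinguish "book" the noun from "book" the verb; resolving that ambiguity from surrounding words is what contextual, deep learning-based taggers add.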

3. Named Entity Recognition (NER)

NER identifies proper names, dates, locations, and other specific entities within text. This technique is widely used in information retrieval, customer insights, and automated indexing. By recognizing names of individuals, organizations, and geographical locations, NER plays a significant role in applications such as news aggregation, personal assistant systems, and financial text analysis. Advanced NER models now integrate contextual embeddings to improve entity recognition across various domains and languages.
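The simplest form of entity recognition is a dictionary (gazetteer) lookup plus patterns for regular formats such as dates. The sketch below assumes a small hypothetical gazetteer and an English day-month-year date pattern; it illustrates what NER outputs (span plus label), not how modern contextual-embedding models arrive at it.

```python
import re

# Hypothetical gazetteer; a trained NER model would generalize beyond a fixed list.
GAZETTEER = {
    "ada lovelace": "PERSON",
    "united nations": "ORG",
    "london": "LOC",
}
MONTHS = "January|February|March|April|May|June|July|August|September|October|November|December"
DATE_PATTERN = re.compile(r"\b\d{1,2} (?:" + MONTHS + r") \d{4}\b")


def recognize_entities(text):
    entities = []
    # Case-insensitive match on whole words; assumes lowercasing keeps
    # character offsets unchanged (true for ASCII text).
    lowered = text.lower()
    for phrase, label in GAZETTEER.items():
        for m in re.finditer(r"\b" + re.escape(phrase) + r"\b", lowered):
            entities.append((text[m.start():m.end()], label, m.start()))
    for m in DATE_PATTERN.finditer(text):
        entities.append((m.group(), "DATE", m.start()))
    # Report entities in the order they appear in the text.
    return [(span, label) for span, label, _ in sorted(entities, key=lambda e: e[2])]
```

For `"Ada Lovelace was born in London on 10 December 1815."` this returns `("Ada Lovelace", "PERSON")`, `("London", "LOC")`, and `("10 December 1815", "DATE")`. Any name missing from the list is silently missed, which is why practical systems learn entity boundaries and types from context instead.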

4. Parsing and Syntax Analysis

Parsing involves analyzing the grammatical structure of a sentence. Dependency parsing determines the relationships between words in a sentence (for example, which noun is the subject of a verb), while constituency parsing identifies how words group together into nested phrases. These structures support tasks such as text generation, sentiment analysis, and question answering. Recent advances in deep learning, particularly transformer-based models, have significantly improved parsing accuracy and, with it, downstream language understanding.
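Constituency parsing can be illustrated with the CKY algorithm, a classic dynamic-programming parser for grammars in Chomsky normal form. The sketch below assumes a tiny hypothetical grammar and lexicon; it fills a chart of which phrase labels can span each substring and then reads back one tree. A dependency parser would instead output head-to-dependent arcs between words.

```python
from itertools import product

# Hypothetical toy grammar in Chomsky normal form.
LEXICON = {
    "the": {"Det"}, "a": {"Det"},
    "dog": {"N"}, "cat": {"N"},
    "saw": {"V"},
}
BINARY_RULES = {
    ("NP", "VP"): {"S"},
    ("Det", "N"): {"NP"},
    ("V", "NP"): {"VP"},
}


def cky_parse(words):
    n = len(words)
    # chart[i][j] maps each label that can span words[i:j] to a backpointer:
    # the word itself for leaves, or (split point, left label, right label).
    chart = [[{} for _ in range(n + 1)] for _ in range(n + 1)]
    for i, w in enumerate(words):
        for label in LEXICON.get(w, ()):
            chart[i][i + 1][label] = w
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                for left, right in product(chart[i][k], chart[k][j]):
                    for parent in BINARY_RULES.get((left, right), ()):
                        # Keep only the first derivation found for each label,
                        # so ambiguous sentences yield a single tree.
                        chart[i][j].setdefault(parent, (k, left, right))
    return build_tree(chart, 0, n, "S") if "S" in chart[0][n] else None


def build_tree(chart, i, j, label):
    back = chart[i][j][label]
    if isinstance(back, str):  # leaf: the word itself
        return f"({label} {back})"
    k, left, right = back
    return f"({label} {build_tree(chart, i, k, left)} {build_tree(chart, k, j, right)})"
```

`cky_parse("the dog saw a cat".split())` returns the bracketed tree `(S (NP (Det the) (N dog)) (VP (V saw) (NP (Det a) (N cat))))`, showing how the words group into noun and verb phrases, while an ungrammatical order such as `"dog the saw"` returns `None`.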

5. Sentiment Analysis

Sentiment analysis determines the emotional tone of a text, identifying whether it is positive, negative, or neutral. It is widely used in social media monitoring, customer feedback analysis, and brand sentiment tracking. Advanced sentiment analysis models incorporate aspects such as sarcasm detection, aspect-based sentiment analysis (identifying sentiment about specific attributes of a product or service), and multimodal sentiment analysis (combining text, audio, and visual data for more accurate sentiment detection).
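The basic positive/negative/neutral decision can be sketched with a lexicon-based scorer that sums word polarities and flips a word's polarity after a negator. The mini-lexicon, the negator list, and the zero threshold are hypothetical; real systems use much larger lexicons or trained classifiers, and this sketch does nothing about sarcasm, aspects, or non-text modalities.

```python
import re

# Hypothetical polarity lexicon and negator list.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "slow": -1}
NEGATORS = {"not", "never", "no"}


def sentiment(text, threshold=0):
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0)
        # Flip polarity when the previous token is a negator ("not good").
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    if score > threshold:
        return "positive", score
    if score < -threshold:
        return "negative", score
    return "neutral", score
```

For instance, `"I love this phone, the screen is great!"` scores `("positive", 4)`, while `"The battery is not good and shipping was slow."` scores `("negative", -2)` because the negation turns "good" negative.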

6. Machine Translation

Machine translation automatically converts text from one language to another. Neural Machine Translation (NMT) has significantly improved translation accuracy by using deep learning techniques such as sequence-to-sequence models and attention mechanisms. Unlike traditional rule-based and statistical methods, which translate largely word by word or phrase by phrase, NMT models the entire sentence and therefore produces more fluent, contextually appropriate translations. Current research focuses on translation for low-resource languages and on real-time, domain-specific adaptation.
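The attention mechanism mentioned above lets the decoder, at each output step, weigh every encoder position by its relevance instead of relying on a single fixed summary of the source sentence. The sketch below implements the scaled dot-product variant used in Transformers (early sequence-to-sequence NMT used an additive scoring function, but both end in a softmax-weighted average). The query, key, and value vectors are hypothetical toy numbers; in a real model they are learned projections of hidden states.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    d = len(query)
    # Relevance of each encoder position to the current decoder query,
    # scaled by sqrt(d) to keep scores in a reasonable range.
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Context vector: attention-weighted average of the value vectors.
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, context


# Hypothetical encoder states for a three-word source sentence.
KEYS = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = attention([1.0, 0.0], KEYS, KEYS)
```

Here the query aligns with the first and third source positions, so they receive equal and larger weights than the second; the weights always sum to 1, which is what makes the context vector a weighted average.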

7. Text Summarization

Text summarization condenses a document into its most important information while maintaining coherence and readability. There are two main types: extractive summarization, which selects key sentences directly from the document, and abstractive summarization, which generates new sentences that paraphrase the source content. NLP-powered summarization is applied in news aggregation, legal document analysis, and academic research, significantly reducing the time required to process large volumes of text.
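Extractive summarization can be sketched with a simple frequency heuristic: words that recur across the document are assumed to be important, and each sentence is scored by the average frequency of its non-stopwords. The stopword list and the scoring rule are illustrative assumptions, not a standard algorithm; abstractive summarization, by contrast, requires a generative model.

```python
import re
from collections import Counter

# Hypothetical, deliberately small stopword list.
STOPWORDS = {"the", "a", "an", "and", "of", "to", "is", "in", "it", "for", "on", "that", "with"}


def content_words(text):
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text, num_sentences=2):
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    freq = Counter(content_words(text))

    def score(sentence):
        tokens = content_words(sentence)
        # Average rather than total frequency, so long sentences are not
        # automatically favored.
        return sum(freq[t] for t in tokens) / (len(tokens) or 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore original order for readability
    return " ".join(sentences[i] for i in keep)
```

On `"NLP models process text. NLP models summarize text quickly. The weather was sunny."`, the two sentences about NLP share the most frequent words and are selected, while the off-topic sentence is dropped.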

8. Speech Recognition and Text-to-Speech (TTS)

Speech recognition converts spoken language into text, enabling voice assistants, automated transcription services, and hands-free computing. Recent advances in deep learning, particularly recurrent neural networks (RNNs) and transformers, have improved speech-to-text accuracy across diverse accents and noisy environments. Text-to-speech (TTS) synthesizes human-like speech from text, enhancing accessibility for visually impaired individuals and improving user interaction in virtual assistants and customer service applications. Deep learning-based TTS models, such as WaveNet, produce natural-sounding speech with improved prosody and intonation.
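Claims about speech-to-text accuracy are usually quantified with word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn the recognizer's output into the reference transcript, divided by the number of reference words. The sketch below computes it with word-level edit distance; it assumes a non-empty reference and whitespace-separated, already-normalized text.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    ref, hyp = reference.split(), hypothesis.split()
    # dp[i][j] = minimum edits needed to turn ref[:i] into hyp[:j]
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # deletion
                dp[i][j - 1] + 1,         # insertion
                dp[i - 1][j - 1] + cost,  # match or substitution
            )
    return dp[len(ref)][len(hyp)] / len(ref)
```

For the reference `"turn on the kitchen lights"` and the hypothesis `"turn on kitchen light"`, one deletion ("the") and one substitution ("lights" to "light") give a WER of 2/5 = 0.4; lower is better.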

Major Applications of NLP

NLP powers a wide range of real-world applications that enhance user experiences and business operations across many industries. The most notable include virtual assistants and chatbots, machine translation, sentiment analysis, automated summarization, intelligent search engines, voice recognition, and personalized recommendation engines, each of which continues to gain more sophisticated capabilities.

Conclusion

Natural Language Processing is a transformative AI technology that enables machines to understand and interact with human language. Its applications span many industries, improving efficiency, customer experience, and decision-making, and the field continues to evolve with advances in deep learning and computational linguistics. However, challenges such as ambiguity, bias, and computational cost must be addressed to unlock its full potential. Future developments, driven by ethical AI considerations and ongoing research, will shape the next generation of language processing tools and continue to redefine communication, automation, and access to information, topics that will be explored further in other articles.
