Hadoop Architecture Explained: Understanding Hadoop’s Layered Design

Hadoop architecture enables scalable and fault-tolerant Big Data storage and processing. This article explains Hadoop’s layered design—from HDFS and MapReduce to YARN and the broader ecosystem—and shows how each component works together to process massive datasets efficiently.

Hadoop architecture forms the backbone of one of the world’s most widely adopted open-source Big Data frameworks. It enables organizations to store, process, and analyze massive volumes of data across distributed clusters of computers in a reliable and cost-effective manner.

Originally inspired by Google’s research papers on the Google File System (GFS) and MapReduce, Hadoop emerged in 2005 when Doug Cutting and Mike Cafarella began developing an open-source alternative. Their objective was to make large-scale data processing accessible beyond technology giants and available to organizations of all sizes.

Since then, Hadoop has evolved into a mature platform used across industries such as finance, healthcare, telecommunications, retail, manufacturing, and government. At the heart of this success lies its layered architecture. Each layer serves a distinct function, yet all layers integrate seamlessly to deliver scalable, fault-tolerant, and high-performance data processing.

Understanding Hadoop architecture is essential for data professionals who design, manage, or operate modern Big Data environments.

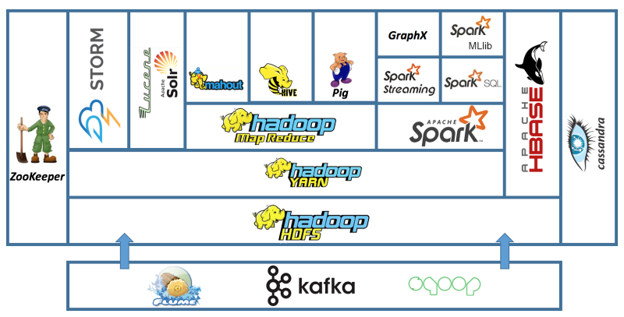

Core Layers of Hadoop Architecture

Hadoop architecture consists of several tightly integrated layers. Each layer addresses a specific aspect of data storage, processing, resource management, or system support.

Together, these layers form a complete ecosystem that transforms raw data into usable insights.

The primary layers include:

- Hadoop Distributed File System (HDFS)

- MapReduce Processing Framework

- YARN Resource Management

- Hadoop Common Libraries

- Hadoop Ecosystem Tools

Let’s examine each layer in detail.

Hadoop Distributed File System (HDFS)

HDFS is the storage foundation of Hadoop architecture. It is designed to store extremely large files across clusters of commodity hardware while providing high throughput and reliability.

Instead of storing files as single units, HDFS divides files into fixed-size blocks (128 MB by default in Hadoop 2 and later). These blocks are distributed across multiple machines in the cluster. To protect against hardware failure, HDFS replicates each block across different nodes, keeping three copies by default.

Because of this design, data remains accessible even when individual servers fail.
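To make the block idea concrete, here is a minimal Python sketch of how a file could be cut into blocks and how each block's replicas could be spread over distinct nodes. It assumes the default 128 MB block size and replication factor of 3. The function names and node names (`dn1` to `dn4`) are hypothetical, and the round-robin placement is a deliberate simplification, not the real HDFS placement policy.

```python
from __future__ import annotations

MB = 1024 * 1024
BLOCK_SIZE = 128 * MB  # default block size (dfs.blocksize) in Hadoop 2 and later
REPLICATION = 3        # default replication factor (dfs.replication)


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[tuple[int, int]]:
    """Return (offset, length) pairs; only the last block may be shorter than block_size."""
    blocks = []
    offset = 0
    while offset < file_size:
        length = min(block_size, file_size - offset)
        blocks.append((offset, length))
        offset += length
    return blocks


def assign_replicas(num_blocks: int, nodes: list[str], replication: int = REPLICATION) -> list[list[str]]:
    """Put each block's replicas on distinct nodes using simple round-robin.

    Hypothetical simplification: real HDFS placement also takes rack topology into account.
    """
    if replication > len(nodes):
        raise ValueError("replication factor exceeds the number of nodes")
    return [
        [nodes[(i + r) % len(nodes)] for r in range(replication)]
        for i in range(num_blocks)
    ]


if __name__ == "__main__":
    # Hypothetical 300 MB file on a hypothetical four-node cluster
    blocks = split_into_blocks(300 * MB)
    replicas = assign_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
    for (offset, length), nodes in zip(blocks, replicas):
        print(f"offset={offset // MB} MB length={length // MB} MB replicas={nodes}")
```

A 300 MB file becomes two full 128 MB blocks plus one 44 MB block, and because every block has copies on three different machines, losing any single node still leaves two readable replicas.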

Key characteristics of HDFS include:

- Distributed block-based storage

- Automatic replication for fault tolerance

- High throughput access for large datasets

- Optimized for sequential read and write operations

- Support for very large files

HDFS works particularly well for workloads that involve scanning large datasets, such as log analysis, machine learning training, and batch processing.

By providing reliable and scalable storage, HDFS enables organizations to retain massive volumes of raw data at relatively low cost.

MapReduce Processing Layer

MapReduce is Hadoop’s original processing engine. It provides a programming model that enables parallel computation across distributed data.

The model divides processing into two main stages:

- Map stage: Processes input records in parallel and emits intermediate key-value pairs

- Reduce stage: Aggregates and summarizes the intermediate results

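Between these stages, the framework shuffles and sorts the intermediate pairs so that all values for a given key reach the same reducer. This approach allows Hadoop to process large datasets by distributing work across many nodes simultaneously.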
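The classic way to see the model is word count. The sketch below simulates the map, shuffle, and reduce steps in a single Python process; it is an illustration of the programming model only. In a real cluster, map tasks run in parallel on the nodes that hold the input blocks, and jobs are usually written against Hadoop's Java `Mapper` and `Reducer` classes. All function names here are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map: turn one input record (a line of text) into intermediate (word, 1) pairs."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle and sort: group every value by key so each key reaches one reducer."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: aggregate all values that share a key."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["the quick brown fox", "the lazy dog"]))
    # {'brown': 1, 'dog': 1, 'fox': 1, 'lazy': 1, 'quick': 1, 'the': 2}
```

Because each map call only looks at its own line and each reduce call only looks at one key, both stages can be split across machines without coordination; the shuffle is the one step that moves data between them.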

MapReduce offers several advantages:

- Parallel execution across clusters

- Automatic handling of failures

- Scalability for growing workloads

Although newer engines such as Apache Spark now dominate many workloads, MapReduce remains an important part of Hadoop architecture. Many legacy systems still rely on MapReduce for batch-oriented processing tasks.

Understanding MapReduce also helps professionals grasp the foundational concepts behind distributed data processing.

YARN (Yet Another Resource Negotiator)

YARN is the resource management layer of Hadoop architecture. It controls how cluster resources such as CPU and memory are allocated to applications.

Before YARN arrived in Hadoop 2, Hadoop tightly coupled resource management with MapReduce: a single JobTracker both managed cluster resources and scheduled MapReduce jobs. This limited Hadoop to a single processing model. YARN changed this design by separating resource management from processing logic.

With YARN, multiple processing engines can run on the same cluster.

YARN provides:

- Centralized resource management

- Application scheduling

- Cluster monitoring

- Support for diverse workloads

As a result, organizations can run MapReduce, Spark, and other engines side by side on one Hadoop cluster, along with higher-level tools such as Hive that execute their queries on those engines.

This flexibility transforms Hadoop from a single-purpose batch system into a multi-purpose data platform.
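The core idea, a central manager handing out slices of memory and CPU to whichever application asks, can be sketched in a few lines of Python. This is a toy first-fit allocator under stated assumptions: real YARN uses pluggable schedulers (such as the CapacityScheduler or FairScheduler), and each application's ApplicationMaster negotiates containers with the ResourceManager. The class names mirror YARN's roles, but the methods, application names, and resource sizes are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NodeManager:
    """One worker node and the resources it still has free."""
    name: str
    free_memory_mb: int
    free_vcores: int
    containers: list[tuple[str, int, int]] = field(default_factory=list)

    def can_fit(self, memory_mb: int, vcores: int) -> bool:
        return self.free_memory_mb >= memory_mb and self.free_vcores >= vcores

    def launch(self, app: str, memory_mb: int, vcores: int) -> None:
        # Reserve the resources and record the container for this application
        self.free_memory_mb -= memory_mb
        self.free_vcores -= vcores
        self.containers.append((app, memory_mb, vcores))


class ResourceManager:
    """Toy first-fit allocator; real YARN uses pluggable schedulers instead."""

    def __init__(self, nodes: list[NodeManager]) -> None:
        self.nodes = nodes

    def allocate(self, app: str, memory_mb: int, vcores: int) -> str | None:
        # Grant the container on the first node with enough free memory and vcores
        for node in self.nodes:
            if node.can_fit(memory_mb, vcores):
                node.launch(app, memory_mb, vcores)
                return node.name
        return None  # the request must wait until resources are released


if __name__ == "__main__":
    rm = ResourceManager([NodeManager("nm1", 8192, 4), NodeManager("nm2", 8192, 4)])
    print(rm.allocate("mapreduce-job", 4096, 2))  # nm1
    print(rm.allocate("spark-app", 6144, 2))      # nm2
    print(rm.allocate("spark-app", 4096, 2))      # nm1
    print(rm.allocate("spark-app", 4096, 1))      # None
```

The point of the sketch is that the allocator knows nothing about MapReduce or Spark; it only sees requests for memory and vcores, which is what lets different engines share one cluster.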

Hadoop Common

Hadoop Common contains the shared libraries, utilities, and APIs used across all Hadoop modules.

These components include:

- Configuration management tools

- File system and I/O libraries

- Security and authentication services

- Network communication utilities
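Configuration management is the most visible of these services. Hadoop reads XML files such as `core-site.xml`, where each setting is a `<property>` with a `<name>` and a `<value>`, and site files override the shipped defaults. The Python sketch below mimics that layering with the standard-library XML parser; it is an illustration, not Hadoop's own `Configuration` class, and it ignores features such as `final` properties and variable expansion. The function names and the NameNode address `namenode.example:8020` are hypothetical.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET


def parse_hadoop_config(xml_text: str) -> dict[str, str]:
    """Read <property><name>...</name><value>...</value></property> entries."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None and value is not None:
            props[name.strip()] = value.strip()
    return props


def load_config(*xml_docs: str) -> dict[str, str]:
    """Merge documents in order; later documents (e.g. site files) override earlier ones."""
    merged: dict[str, str] = {}
    for doc in xml_docs:
        merged.update(parse_hadoop_config(doc))
    return merged


# Illustrative defaults and a site override in Hadoop's XML layout
DEFAULTS = """<configuration>
  <property><name>fs.defaultFS</name><value>file:///</value></property>
  <property><name>io.file.buffer.size</name><value>4096</value></property>
</configuration>"""

SITE = """<configuration>
  <property><name>fs.defaultFS</name><value>hdfs://namenode.example:8020</value></property>
</configuration>"""

if __name__ == "__main__":
    config = load_config(DEFAULTS, SITE)
    print(config["fs.defaultFS"])
```

Because every module reads settings through the same shared mechanism, a single override such as `fs.defaultFS` points all clients at the same file system.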

Although Hadoop Common operates behind the scenes, it plays a critical role. Without these shared services, the core layers could not function consistently.

Therefore, Hadoop Common acts as the glue that holds the Hadoop ecosystem together.

Hadoop Ecosystem Components

Beyond its core layers, Hadoop architecture includes a rich ecosystem of supporting tools. These tools extend Hadoop’s capabilities into analytics, data warehousing, streaming, and real-time processing.

Common ecosystem components include:

- Hive: SQL-based querying and data warehousing

- Pig: Scripting for data transformation

- HBase: NoSQL database for real-time access

- Spark: In-memory processing framework

- Oozie: Workflow orchestration

- ZooKeeper: Distributed coordination service

Together, these tools allow organizations to build complete data platforms on top of Hadoop.

Instead of relying on a single tool, enterprises combine ecosystem components to address diverse analytical needs.

Master–Slave Cluster Architecture

Hadoop clusters typically follow a master–slave design.

In HDFS:

- NameNode manages file system metadata

- DataNodes store actual data blocks

In YARN:

- ResourceManager controls cluster resources

- NodeManagers launch and monitor containers on each worker node

This separation of responsibilities, with masters holding metadata and scheduling state while workers store data and run tasks, improves scalability and simplifies management.

Moreover, administrators can scale clusters by adding more worker nodes, each typically running a DataNode and a NodeManager, without major architectural changes.
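The division of labor in HDFS can be sketched as two data structures: the NameNode keeps only metadata (which blocks make up a file and where their replicas live), while DataNodes keep the bytes. A client first asks the NameNode for block locations, then reads each block from any DataNode that still holds it. The Python sketch below is a simplified model under that assumption; in real HDFS the exchange happens over RPC and the NameNode learns block locations from DataNode block reports. The field names, `read_file`, and the block IDs are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DataNode:
    """Worker that stores actual block contents (block id -> bytes)."""
    name: str
    blocks: dict[str, bytes] = field(default_factory=dict)


@dataclass
class NameNode:
    """Master that stores only metadata, never file contents."""
    files: dict[str, list[str]] = field(default_factory=dict)      # path -> ordered block ids
    locations: dict[str, list[str]] = field(default_factory=dict)  # block id -> DataNode names


def read_file(path: str, namenode: NameNode, datanodes: dict[str, DataNode]) -> bytes:
    """Look up block locations on the NameNode, then fetch each block from a live DataNode."""
    parts = []
    for block_id in namenode.files[path]:
        for dn_name in namenode.locations[block_id]:
            dn = datanodes.get(dn_name)  # None models a failed or removed DataNode
            if dn is not None and block_id in dn.blocks:
                parts.append(dn.blocks[block_id])
                break
        else:
            raise OSError(f"no live replica for {block_id}")
    return b"".join(parts)


if __name__ == "__main__":
    datanodes = {
        "dn1": DataNode("dn1", {"blk_1": b"hello "}),
        "dn2": DataNode("dn2", {"blk_1": b"hello ", "blk_2": b"world"}),
        "dn3": DataNode("dn3", {"blk_2": b"world"}),
    }
    namenode = NameNode(
        files={"/logs/a.txt": ["blk_1", "blk_2"]},
        locations={"blk_1": ["dn1", "dn2"], "blk_2": ["dn2", "dn3"]},
    )
    del datanodes["dn2"]  # simulate a DataNode failure
    print(read_file("/logs/a.txt", namenode, datanodes))
```

Adding capacity in this model means registering another `DataNode` and recording its blocks in the NameNode's metadata; neither the client logic nor the master's role changes.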

Rack Awareness

When configured with the cluster’s network topology, Hadoop knows how nodes are physically organized within data center racks.

This awareness allows Hadoop to:

- Distribute replicas across racks

- Reduce network congestion

- Improve fault tolerance

If an entire rack fails, Hadoop can still access replicas stored elsewhere. Consequently, rack awareness strengthens reliability and performance.
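For a replication factor of 3, HDFS's default policy places the first replica on the writer's node (when the writer runs on a DataNode), the second on a node in a different rack, and the third on another node in that same remote rack. The Python sketch below follows that rule under the stated assumption that the writer is a DataNode, and it simply raises an error where real HDFS would fall back to other placements. The function name, node names, and rack IDs are hypothetical.

```python
from __future__ import annotations

import random


def place_replicas(writer: str, topology: dict[str, str], rng: random.Random) -> list[str]:
    """Pick three replica nodes following the default rack-aware rule.

    topology maps node name -> rack id. Assumes the writer is itself a DataNode.
    """
    local_rack = topology[writer]
    first = writer  # replica 1: the writer's own node

    remote_nodes = [n for n, rack in topology.items() if rack != local_rack]
    if not remote_nodes:
        raise ValueError("sketch needs at least two racks")
    second = rng.choice(remote_nodes)  # replica 2: a node on a different rack

    remote_rack = topology[second]
    same_remote = [n for n, rack in topology.items() if rack == remote_rack and n != second]
    if not same_remote:
        raise ValueError("sketch needs at least two nodes on the remote rack")
    third = rng.choice(same_remote)  # replica 3: another node on that remote rack

    return [first, second, third]


if __name__ == "__main__":
    topology = {"dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack2", "dn4": "/rack2"}
    print(place_replicas("dn1", topology, random.Random(0)))
```

The replicas always span exactly two racks: losing the writer's rack leaves two copies, and losing the remote rack leaves one, while only one copy of each block has to cross between racks during the write.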

Why Hadoop Architecture Matters

Hadoop architecture delivers several important benefits:

- Horizontal scalability

- High availability

- Cost-effective storage

- Support for multiple processing engines

- Flexibility for diverse workloads

Because of these advantages, Hadoop continues to serve as a foundational platform in enterprise Big Data environments.

Even as cloud-native platforms evolve, many of Hadoop’s architectural principles continue to influence modern data systems.

Building Skills in Hadoop Architecture

Professionals who understand Hadoop architecture can design better data platforms, troubleshoot issues faster, and optimize performance more effectively.

To develop these skills, explore the DASCIN Enterprise Big Data Professional (EBDP®) certification and the other certifications under the DASCIN Enterprise Big Data Framework (EBDF).

These globally recognized programs offered through DASCIN cover Hadoop, Spark, data lakes, and enterprise-scale analytics. They provide practical knowledge that bridges theory and real-world implementation.

Conclusion

Hadoop architecture provides a layered, modular foundation for distributed Big Data storage and processing. By combining HDFS, MapReduce, YARN, Hadoop Common, and ecosystem tools, Hadoop transforms raw data into actionable insights at scale.

Understanding how these layers interact empowers professionals to build reliable, scalable, and future-ready data platforms.

As organizations continue to rely on data-driven strategies, Hadoop architecture remains a critical building block of modern enterprise analytics.
